Computer Vision Lighting: Reegeel

Computer Vision software for automatic placement of outdoor building lighting for Reegeel clients.

- Industry

- Retail & Distribution

Customer goal

When choosing the exterior finish of a house, wallpaper for a bedroom, or a fence for a garden, we often face the imagination gap problem: it is difficult for us to predict how satisfied we will be with the choice of materials and colors at the end of the repair.

A similar problem arises when you are planning outdoor lighting: on the eve of the New Year or Halloween, we carefully consider how we will decorate the house and how much festive illumination will need to surprise our neighbors, and then it turns out that we lack one light string for a perfect picture.

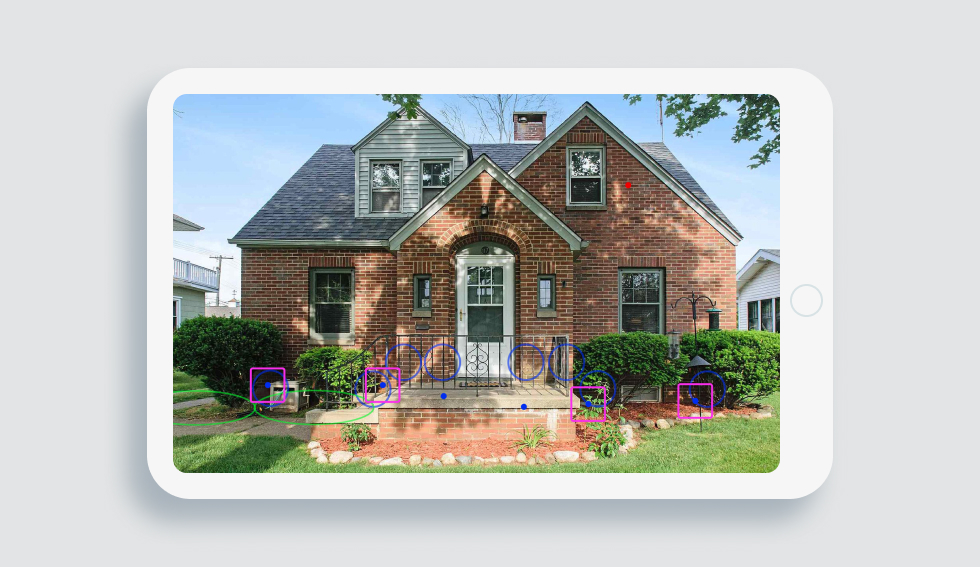

To avoid this problem and make outdoor lighting as thoughtful as possible without wasting time and nerves, Reegeel developed a service for its users to automatically generate outdoor lighting plans for buildings based on Computer Vision. Thanks to it, users can upload a photo of their house to the system, get approximately five lighting options, choose the one they like, then pay and wait for their order.

When Reegeel came to us, they had already worked with two teams. However, they were still not satisfied with the accuracy of the results of the algorithms. They asked us to analyze the existing solution and come up with suggestions for improvements.

Solution

Our team analyzed the client’s current solution to understand why the algorithm produces inaccurate results. We had several hypotheses, the main of which was the problem with the quality of the initial data that was used to create the dataset and train the model.

One of the main tasks of the model used in the solution was image segmentation. The dataset was created based on images of buildings that are freely available on the Internet. The data was labeled into 11 classes. According to the documentation provided, the accuracy according to the IOU score (the standard metric used to evaluate data segmentation) ranged from 50% to 82%, depending on the class.

Semantic segmentation

We analyzed approximately 5% of randomly selected photos from the dataset provided by the customer. After analysis, we were able to identify the following problems with the data used to train the model:

- low photo resolution (less than 1024 px on the smaller side);

- lack of unified labeling rules (for example, somewhere the houses in the background are marked, somewhere not);

- syntax errors (for example, the wall is marked as a road);

- photos were taken mainly in the USA (architecture has its specifics);

- all photos were taken primarily in summer;

- low quality of the mask.

Obviously, the result of segmentation can be significantly improved by better processing the data used to train the model.

To do this, our team has developed a plan of actions required to create a high-quality dataset and train the model once again:

- Determine the target group of images that will be as similar as possible to client images and have a diverse context – time of year, angle, type of architecture. Collect them in a test dataset in the amount of about 100 pieces;

- Based on the test dataset, determine the main characteristics on which the training data set should be developed;

- Develop data labeling rules and determine the quality of detail (The quality of detail affects the labeling speed and the final values of the IOU metric. That is, for values of 80%, you can mark up faster and less efficiently; for values closer to 90%, the markup quality should be higher);

- Form and label a dataset to train the model one more time.

In our experience, to obtain more than 80% IOU accuracy of results for all 11 classes, we need from 3500 to 5000 well-labeled images. For ensuring the highest quality of entire solution output, we prepared an automatic script for validating the accuracy and tested it on a reference dataset of 100 qualitatively labeled images.

Now, the client can use the script to prevent poor performance of the solution. Our Solution Architects also analyzed the current code and, together with the team, drew up a detailed roadmap for the further development of the project to obtain maximum results at minimum cost.

The successfully completed Discovery Phase has already brought value, reduced risks and became the great foundation for the future development of the client’s project.

By addressing data quality issues and retraining the model, the service now provides more precise recommendations, enhancing customer satisfaction. With an automatic validation script, errors are minimized, saving time and resources.

With an automatic validation script, errors are minimized, saving time and resources.

Solution Architects’ detailed plan ensures maximum results at minimum cost, optimizing profitability.